Submit using markov as the assignment name. Submit a README.txt and all .java files -- don't submit .class files.

MarkovModel using

your code from lab.

MapMarkovModel class that generates text more

efficiently by utilizing a HashMap to map from a k-character key (i.e., k-gram) to a list of every k-gram that follows key in the training text.

WordMarkovModel class that will generate a

random text by selecting random sequences of words

represented by the WordNgram class. You will

complete the WordNGram class.

MarkovModel and MapMarkovModel

implementations while varying the different parameters (k-gram length,

length of

training text, and number of characters generated). Time

your code WordMarkovModel with different

implementations of hashcode in

WordNgram. Report your results in your README.txt.

makeNGram method. The method is passed a value for the k in k-gram,

and the length of the text to generate randomly. The function returns a new String

constructed based on k-gram frequencies in

myString. Note that you should use a StringBuilder to generate the random text because a StringBuilder can append characters in time proportional to the number of characters appended, while concatenating a String takes time proportional to the length of the resulting String.

Random object used for random-number

generation that is constructed thusly:

Using the seed 1234 to initialize the random-number stream ensures that the same random numbers are generated each time you run the program. Removing RANDOM_SEED and usingprivate static final int RANDOM_SEED = 1234; // code omitted myRandom = new Random(RANDOM_SEED);

new Random() will

result in a different set of random numbers, and thus different text,

being generated each time you run the program. This is more amusing, but

harder to debug. If you use a seed of 1234 in your smart/Map

model you should get the same random text as when the brute-force method

is used. This will help you debug your program because you can check

your results with those of the code you're given which hopefully you can

rely on as being correct.

Once you have implemented your random text generator, you should time it varying different parameters:

MapMarkovModel that

extends MarkovModel. You only really

need to create a constructor and a new makeNGram method

in MapMarkovModel. Otherwise, your class will

inherit the behavior of MarkovMode.

As the writeup describes, you'll use a map instead of re-scanning the text for every randomly-generated character. To test that your code is doing things faster and not differently you can use the same text file and the same markov-model. If you use the same seed in constructing the random number generator used in your new model, you should get the same text, but your code should be faster. You'll need to time the generation for several text files and several k-orders and record these times with your explanation for them in the README you submit with this assignment.

myWords.

You'll need to ensure that .hashCode, .equals,

.compareTo and .toString work properly and

efficiently. You'll probably need to implement additional methods to

extract state (words) from a WordNgram object. In my code,

for example, I had at least two additional methods to get information

about the words that are stored in the private state of a

WordNgram object.

To facilitate testing your .equals and

.hashcode methods a JUnit testing program is

provided. You should use this, and you may want to add more tests

to it in testing your implementation.

Testing with JUnit shows that a method passes some test, but the test

may not be complete. For example, your code will be able to pass

the

tests for .hashCode without ensuring that objects that

are equal yield the same hash-value. That should be the case, but

it's not tested in the JUnit test suite you're given.

To test your WordNgram class you're given testing code.

This code tests individual methods in your class, these tests are

called unit tests and so

you need to use the standard JUnit

unit-testing library with the

WordNgramTest.java

file to test

your implementation.

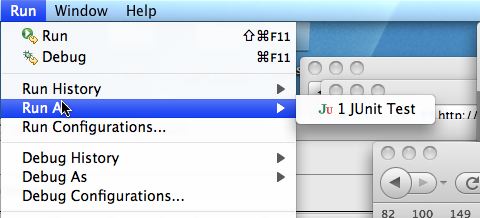

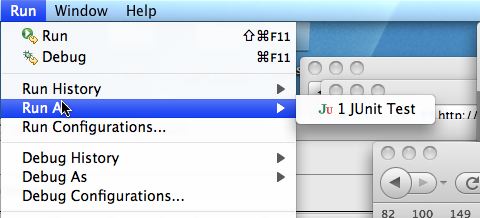

To choose Run as JUnit test first use the Run As option in the Run menu as shonw on the left below. You have to select the JUnit option as shown on the right below. Most of you will have that as the only option.

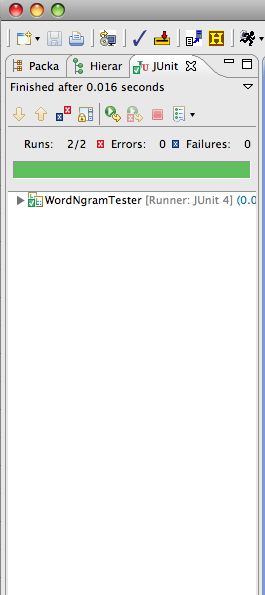

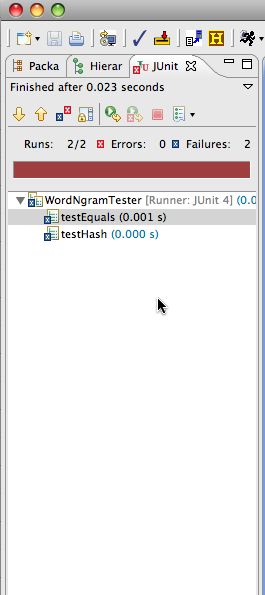

There are two tests in

WordNgramTest.java: one for the

correctness of .equals and one for the "performance"

of the .hashCode method.

If the JUnit tests pass, you'll get all green as shown on the left below. Otherwise you'll get red -- on the right below -- and an indication of the first test to fail. Fix that, go on to more tests. The red was obtained from the code you're given. You'll work to make the testing all green.

|

|

WordMarkovModel that

extends the abstract class AbstractModel class. This

should be very similar to the MapMarkovModel class you

wrote, but this class uses words rather than characters.

A sequence of characters was stored as a String in the code for character-oriented Markov models. For this program you'll use ArrayLists (or arrays) of Strings to represent sequences of words used in the model.

The idea is that you'll use 4-words rather than 4-characters in

predicting/generating the next word in an

order-4 word based Markov Model. You'll need to construct the

map-based WordMarkovModel and implement its methods so that

instead of generating 100 characters at random it generates 100 words at

random (but based on the training text it reads).

To get all words from

a String use the String split method which returns an

array. The regular expression "\\s+" represents any whitespace,

which is exactly what you want to get all the words in file/string.

WordNgram object, is mapped to

a list of WordNgram objects --- specifically the n-grams

that follow it in the training text. This is exactly what your

MapMarkovModel did, but it mapped a String to a list

of Strings. Each String represented a sequence of k-characters. In

this new model, each WordNgram represents a sequence

of k-words. The concept is the same.

WordMarkovModel code you write you'll

conceptually replace Strings in the map with WordNgrams. In the code you

wrote for maps and strings, calls to .substring will be

replaced by calls to new WordNgram. This is because

.substring creates a new String from parts of another and

returns the new String. In the WordMarkovModel code you

must create a new WordNgram from the array of strings, so

that each key in the word-map, created by calling new, corresponds to a

string created in your original program created by calling substring.

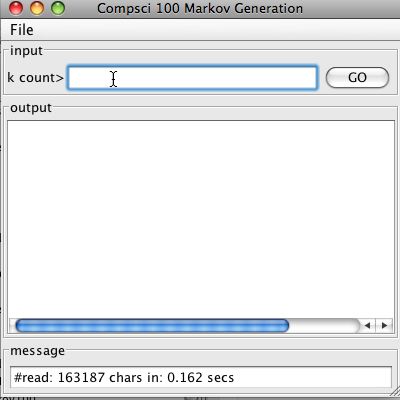

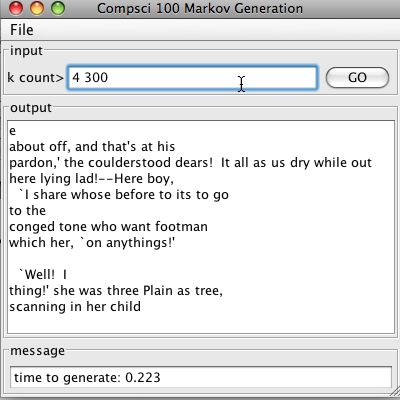

After you snarf the assignment you should run MarkovMain to use the brute-force Markov generator. The GUI associated with the program is shown below.

You use the File menu to browse and select a data file as the training text. There is a data directory provided when you snarf containing several files you can use as training texts when developing your program.

On the left is a screen shot of the program just after alice.txt has been loaded. On the right is a screen shot after generating 300 random characters using an order-4 Markov model with Alice in Wonderland as the training text.

|

|

initialize method of the model is called with a

Scanner from which characters and words are read. The code you have

already reads all the characters into a string and uses this string to

generate characters "at random", but based on their frequency in an

order-k Markov model.

process method is called when the user presses the

GO button or the return/enter key in the text field of

the GUI. The convention in this program is that the Object

passed to process is a String that contains

space-separated numbers representing the order k of the

Markov model and the number of letters to generate (or number of

words). See the existing code for examples.

messageViews with a String, or showViewsError

to with a String, respectively. You can either use

messageViews, this.messageViews or

super.messageViews since the abstract class from which your

model inherits contains these methods.

update or super.update with a

String. The update method in the views displays

a string. To clear the output in the views, call

super.clear (or clear). To display

multiple lines, either construct one string to pass to

update or call update repeatedly

without clearing.

In the code

you're given in MarkovModel

the call below sends the built-up randomly-generated String to

the views --- see method brute in the code. The

code

uses a StringBuilder for efficiency in constructing the

character-by-character randomly-generated text.

You can modify MarkovMain to use your model by simply changing one line.

WordNgram for word Markov

models. For that modification you'll create a new class named

WordMarkovModel that you use in main. But the

word-markov-models depend on the WordNgram class you must

develop and test.