First, we iterate through every file in the Shakespeare collection, converting the text to lowercase and removing punctuation. Next, we initialize a TfidfVectorizer. In particular, we pass it our own function that performs custom tokenization and stemming, but we use scikit-learn's built-in stop-word removal rather than NLTK's. Then we call fit_transform, which does two things: first, it learns a vocabulary of 'known' terms (along with their inverse document frequencies) from the input text; then it computes the tf-idf score of each vocabulary term in each document.
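A minimal sketch of this pipeline follows. It assumes a few short stand-in strings in place of the Shakespeare files. `preprocess`, `simple_stem`, and `tokenize_and_stem` are hypothetical helpers, and the crude suffix-stripping stemmer is only a stand-in for a real stemmer such as NLTK's.

```python
import string

from sklearn.feature_extraction.text import TfidfVectorizer


def preprocess(text):
    """Lowercase the text and strip ASCII punctuation."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


def simple_stem(token):
    """Hypothetical crude stemmer: strip one common suffix, keeping a stem of 3+ letters."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def tokenize_and_stem(text):
    """Hypothetical custom tokenizer: split on whitespace, then stem each token."""
    return [simple_stem(tok) for tok in text.split()]


# Stand-in documents; in the article each entry would be one Shakespeare file.
raw_docs = [
    "To be, or not to be: that is the question.",
    "All the world's a stage.",
    "Shall I compare thee to a summer's day?",
]
docs = [preprocess(doc) for doc in raw_docs]

vectorizer = TfidfVectorizer(
    tokenizer=tokenize_and_stem,  # our own tokenization + stemming
    token_pattern=None,           # ignored when a tokenizer is given; None avoids a warning
    stop_words="english",         # scikit-learn's built-in English stop-word list
)
# fit_transform learns the vocabulary and idf weights, then returns the tf-idf matrix.
tfidf_matrix = vectorizer.fit_transform(docs)
print(sorted(vectorizer.vocabulary_))
```

One caveat with this combination: scikit-learn removes stop words from the tokens your tokenizer returns, i.e. after stemming. A stop word whose stem differs from it (with this stemmer, "this" becomes "thi") is therefore not removed, and scikit-learn emits a warning about the inconsistency when fitting.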

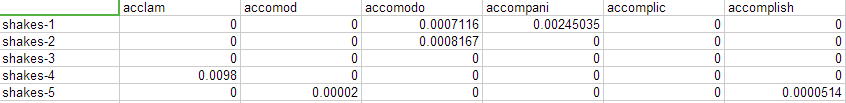

The result is a (sparse) matrix in which the rows are the individual Shakespeare files and the columns are the vocabulary terms, so every cell holds the tf-idf score of one term in one file.
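To make the contents of each cell concrete, here is a pure-Python sketch that builds the same kind of document-term matrix by hand. It assumes scikit-learn's default weighting (raw term counts, smoothed idf, L2-normalized rows); `compute_tfidf` and the tiny tokenized documents are hypothetical.

```python
import math
from collections import Counter


def compute_tfidf(tokenized_docs):
    """Dense tf-idf matrix mimicking TfidfVectorizer's defaults
    (raw counts, smooth_idf=True, sublinear_tf=False, norm='l2')."""
    vocab = sorted({tok for doc in tokenized_docs for tok in doc})
    n_docs = len(tokenized_docs)
    # Document frequency: the number of documents containing each term.
    df = Counter(tok for doc in tokenized_docs for tok in set(doc))
    # Smoothed idf, as in scikit-learn: ln((1 + n) / (1 + df)) + 1.
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocab}
    rows = []
    for doc in tokenized_docs:
        counts = Counter(doc)
        row = [counts[t] * idf[t] for t in vocab]  # tf * idf for every vocabulary term
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm if norm else 0.0 for v in row])  # L2-normalize the row
    return vocab, rows


docs = [["king", "crown", "king"], ["crown", "sword"], ["love", "sonnet"]]
vocab, rows = compute_tfidf(docs)
for doc_id, row in enumerate(rows):
    print(doc_id, [round(v, 3) for v in row])
```

In the first document "king" outscores "crown" because it appears twice and occurs in fewer documents; the third document contains neither, so both cells in that row are 0.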

[Table: tfidf]

The table shows sample tf-idf entries from the Shakespeare files. In general, these scores are small: TfidfVectorizer L2-normalizes each row by default, so every score lies between 0 and 1, and a term that does not appear in a document scores exactly 0.